Citations

This panel presents information regarding the papers that have cited the interatomic potential (IP) whose page you are on.

The OpenKIM machine-learning-based Deep Citation framework is used to determine whether a citing article actually used the IP in computations (denoted by "USED") or only cites it as background (denoted by "NOT USED"). For more details on Deep Citation and how to work with this panel, click the documentation link at the top of the panel.

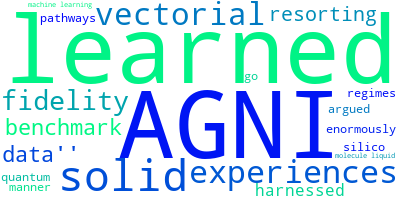

The word cloud to the right is generated from the abstracts of the IP's principal source(s) (given below in "How to Cite") and of the citing articles that were determined to have used the IP. It gives users a quick sense of the types of physical phenomena to which this IP is applied.

The bar chart shows the number of articles that cited the IP per year. Each bar is divided into green (articles that USED the IP) and blue (articles that did NOT USE the IP).
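As a rough illustration of how the bar heights can be derived, the sketch below tallies citing articles by year and by Deep Citation label. It is not OpenKIM's implementation; the `(year, used)` record format and the `citations_per_year` function are hypothetical names introduced only for this example. The sample data are the years of the eight articles marked USED in the list below, plus two NOT USED entries from the same list.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def citations_per_year(records: Iterable[Tuple[int, bool]]) -> Dict[int, Dict[str, int]]:
    """Count citing articles per year, split into USED and NOT USED.

    Hypothetical record format: (publication year, used), where `used`
    is the Deep Citation determination for that article.
    """
    counts: Dict[int, Dict[str, int]] = defaultdict(lambda: {"used": 0, "not_used": 0})
    for year, used in records:
        # Green segment of the bar = "used", blue segment = "not_used".
        counts[year]["used" if used else "not_used"] += 1
    # Sort by year so the result matches the left-to-right order of the bars.
    return dict(sorted(counts.items()))


records = [
    (2023, True), (2023, True), (2022, True), (2019, True),
    (2019, True), (2018, True), (2017, True), (2016, True),
    (2023, False), (2022, False),
]
for year, c in citations_per_year(records).items():
    print(year, "used:", c["used"], "not used:", c["not_used"])
```

Keeping the two counts per year separate, rather than a single total, is what allows each bar to be drawn as two stacked colored segments.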

Users are encouraged to correct errors in the Deep Citation determinations by clicking the speech icon next to a citing article and providing updated information. Corrections will be integrated into the next Deep Citation learning cycle, which occurs on a regular basis.

OpenKIM acknowledges the support of the Allen Institute for AI through the Semantic Scholar project for providing citation information and, when available, the full text of articles, which are used to train the Deep Citation ML algorithm.


This panel provides information on past usage of this interatomic potential (IP), powered by the OpenKIM Deep Citation framework. The word cloud indicates typical applications of the potential. The bar chart shows citations of this IP per year, with each bar divided into articles that used the IP (green) and those that did not (blue). The complete list of articles that cited this IP is provided below, along with the Deep Citation determination on usage. See the Deep Citation documentation for more information.

149 Citations (8 used)

Help us determine which of the papers that cite this potential actually used it to perform calculations. If you know, click the speech icon next to the article.

USED (low confidence) J. Tang, G. Li, Q. Wang, J. Zheng, L. Cheng, and R. Guo, “Effect of Four-Phonon Scattering on Anisotropic Thermal Transport in Bulk Hexagonal Boron Nitride by Machine Learning Interatomic Potential,” SSRN Electronic Journal. 2023. Times cited: 3

USED (low confidence) R. Guo, G. Li, J. Tang, Y. Wang, and X. Song, “Small-data-based Machine Learning Interatomic Potentials for Graphene Grain Boundaries Enabled by Structural Unit Model,” Carbon Trends. 2023. Times cited: 2

USED (low confidence) M. Shiranirad, C. Burnham, and N. J. English, “Machine-learning-based many-body energy analysis of argon clusters: Fit for size?,” Chemical Physics. 2022. Times cited: 3

USED (low confidence) G. S. Dhaliwal, P. Nair, and C. V. Singh, “Uncertainty and sensitivity analysis of mechanical and thermal properties computed through Embedded Atom Method potential,” Computational Materials Science. 2019. Times cited: 9

USED (low confidence) J. Chapman, R. Batra, B. Uberuaga, G. Pilania, and R. Ramprasad, “A comprehensive computational study of adatom diffusion on the aluminum (1 0 0) surface,” Computational Materials Science. 2019. Times cited: 9

USED (low confidence) Y. Zeng, Q. Li, and K. Bai, “Prediction of interstitial diffusion activation energies of nitrogen, oxygen, boron and carbon in bcc, fcc, and hcp metals using machine learning,” Computational Materials Science. 2018. Times cited: 34

USED (low confidence) S. Natarajan and J. Behler, “Self-Diffusion of Surface Defects at Copper–Water Interfaces,” Journal of Physical Chemistry C. 2017. Times cited: 31

Abstract: Solid–liquid interfaces play an important role in many fields like electrochemistry, corrosion, and heterogeneous catalysis. For understanding the related processes, detailed insights into the elementary steps at the atomic level are mandatory.
Here we unravel the properties of prototypical surface-defects like adatoms and vacancies at a number of copper–water interfaces including the low-index Cu(111), Cu(100), and Cu(110), as well as the stepped Cu(211) and Cu(311) surfaces. Using a first-principles quality neural network potential constructed from density functional theory reference data in combination with molecular dynamics and metadynamics simulations, we investigate the defect diffusion mechanisms and the associated free energy barriers. Further, the solvent structure and the mobility of the interfacial water molecules close to the defects are analyzed and compared to the defect-free surfaces. We find that, like at the copper–vacuum interface, hopping mechanisms are preferred compared to exchange m...

USED (low confidence) G. Pilania et al., “Using Machine Learning To Identify Factors That Govern Amorphization of Irradiated Pyrochlores,” Chemistry of Materials. 2016. Times cited: 31

Abstract: Structure–property relationships are a key materials science concept that enables the design of new materials. In the case of materials for application in radiation environments, correlating radiation tolerance with fundamental structural features of a material enables materials discovery. Here, we use a machine learning model to examine the factors that govern amorphization resistance in the complex oxide pyrochlore (A₂B₂O₇) in a regime in which amorphization occurs as a consequence of defect accumulation. We examine the fidelity of predictions based on cation radii and electronegativities, the oxygen positional parameter, and the energetics of disordering and amorphizing the material. No one factor alone adequately predicts amorphization resistance.
We find that when multiple families of pyrochlores (with different B cations) are considered, radii and electronegativities provide the best prediction, but when the machine learning model is restricted to only the B = Ti pyrochlores, the energetics of disor...

NOT USED (low confidence) Y. Yang, B. Xu, and H. Zong, “Physics infused machine learning force fields for 2D materials monolayers,” Journal of Materials Informatics. 2023. Times cited: 0

Abstract: Large-scale atomistic simulations of two-dimensional (2D) materials rely on highly accurate and efficient force fields. Here, we present a physics-infused machine learning framework that enables the efficient development and interpretability of interatomic interaction models for 2D materials. By considering the characteristics of chemical bonds and structural topology, we have devised a set of efficient descriptors. This enables accurate force field training using a small dataset. The machine learning force fields show great success in describing the phase transformation and domain switching behaviors of monolayer Group IV monochalcogenides, e.g., GeSe and PbTe. Notably, this type of force field can be readily extended to other non-transition 2D systems, such as hexagonal boron nitride (h-BN), leveraging their structural similarity. Our work provides a straightforward but accurate extension of simulation time and length scales for 2D materials.

NOT USED (low confidence) M. C. Barry, J. R. Gissinger, M. Chandross, K. Wise, S. Kalidindi, and S. Kumar, “Voxelized atomic structure framework for materials design and discovery,” Computational Materials Science. 2023. Times cited: 0

NOT USED (low confidence) R. Feng et al., “May the Force be with You: Unified Force-Centric Pre-Training for 3D Molecular Conformations,” ArXiv. 2023.
Times cited: 1

Abstract: Recent works have shown the promise of learning pre-trained models for 3D molecular representation. However, existing pre-training models focus predominantly on equilibrium data and largely overlook off-equilibrium conformations. It is challenging to extend these methods to off-equilibrium data because their training objective relies on assumptions of conformations being the local energy minima. We address this gap by proposing a force-centric pretraining model for 3D molecular conformations covering both equilibrium and off-equilibrium data. For off-equilibrium data, our model learns directly from their atomic forces. For equilibrium data, we introduce zero-force regularization and force-based denoising techniques to approximate near-equilibrium forces. We obtain a unified pre-trained model for 3D molecular representation with over 15 million diverse conformations. Experiments show that, with our pre-training objective, we increase forces accuracy by around 3 times compared to the un-pre-trained Equivariant Transformer model. By incorporating regularizations on equilibrium data, we solved the problem of unstable MD simulations in vanilla Equivariant Transformers, achieving state-of-the-art simulation performance with 2.45 times faster inference time than NequIP. As a powerful molecular encoder, our pre-trained model achieves on-par performance with state-of-the-art property prediction tasks.

NOT USED (low confidence) J. López-Zorrilla, X. Aretxabaleta, I. W. Yeu, I. Etxebarria, H. Manzano, and N. Artrith, “ænet-PyTorch: A GPU-supported implementation for machine learning atomic potentials training,” The Journal of Chemical Physics. 2023.
Times cited: 4

Abstract: In this work, we present ænet-PyTorch, a PyTorch-based implementation for training artificial neural network-based machine learning interatomic potentials. Developed as an extension of the atomic energy network (ænet), ænet-PyTorch provides access to all the tools included in ænet for the application and usage of the potentials. The package has been designed as an alternative to the internal training capabilities of ænet, leveraging the power of graphic processing units to facilitate direct training on forces in addition to energies. This leads to a substantial reduction of the training time by one to two orders of magnitude compared to the central processing unit implementation, enabling direct training on forces for systems beyond small molecules. Here, we demonstrate the main features of ænet-PyTorch and show its performance on open databases. Our results show that training on all the force information within a dataset is not necessary, and including between 10% and 20% of the force information is sufficient to achieve optimally accurate interatomic potentials with the least computational resources.

NOT USED (low confidence) Z. Xiao et al., “Advances and applications of computational simulations in the inhibition of lithium dendrite growth,” Ionics. 2022. Times cited: 3

NOT USED (low confidence) M.-S. Lee, M. Kim, and K. Min, “Evaluation of Principal Features for Predicting Bulk and Shear Modulus of Inorganic Solids with Machine Learning,” Materials Today Communications. 2022. Times cited: 3

NOT USED (low confidence) S. Sharma et al., “Machine Learning Methods for Multiscale Physics and Urban Engineering Problems,” Entropy. 2022.
Times cited: 0

Abstract: We present an overview of four challenging research areas in multiscale physics and engineering as well as four data science topics that may be developed for addressing these challenges. We focus on multiscale spatiotemporal problems in light of the importance of understanding the accompanying scientific processes and engineering ideas, where “multiscale” refers to concurrent, non-trivial and coupled models over scales separated by orders of magnitude in either space, time, energy, momenta, or any other relevant parameter. Specifically, we consider problems where the data may be obtained at various resolutions; analyzing such data and constructing coupled models led to open research questions in various applications of data science. Numeric studies are reported for one of the data science techniques discussed here for illustration, namely, on approximate Bayesian computations.

NOT USED (low confidence) J. Fox, B. Zhao, B. G. del Rio, S. Rajamanickam, R. Ramprasad, and L. Song, “Concentric Spherical Neural Network for 3D Representation Learning,” 2022 International Joint Conference on Neural Networks (IJCNN). 2022. Times cited: 1

Abstract: Learning 3D representations of point clouds that generalize well to arbitrary orientations is a challenge of practical importance in domains ranging from computer vision to molecular modeling. The proposed approach uses a concentric spherical spatial representation, formed by nesting spheres discretized the icosahedral grid, as the basis for structured learning over point clouds. We propose rotationally equivariant convolutions for learning over the concentric spherical grid, which are incorporated into a novel architecture for representation learning that is robust to general rotations in 3D.
We demonstrate the effectiveness and extensibility of our approach to problems in different domains, such as 3D shape recognition and predicting fundamental properties of molecular systems.

NOT USED (low confidence) K. Fujioka and R. Sun, “Interpolating Moving Ridge Regression (IMRR): A machine learning algorithm to predict energy gradients for ab initio molecular dynamics simulations,” Chemical Physics. 2022. Times cited: 3

NOT USED (low confidence) A. Mirzoev, B. Gelchinski, and A. A. Rempel, “Neural Network Prediction of Interatomic Interaction in Multielement Substances and High-Entropy Alloys: A Review,” Doklady Physical Chemistry. 2022. Times cited: 2

NOT USED (low confidence) C. Zeng, X. Chen, and A. Peterson, “A nearsighted force-training approach to systematically generate training data for the machine learning of large atomic structures,” The Journal of Chemical Physics. 2022. Times cited: 4

Abstract: A challenge of atomistic machine-learning (ML) methods is ensuring that the training data are suitable for the system being simulated, which is particularly challenging for systems with large numbers of atoms. Most atomistic ML approaches rely on the nearsightedness principle ("all chemistry is local"), using information about the position of an atom's neighbors to predict a per-atom energy. In this work, we develop a framework that exploits the nearsighted nature of ML models to systematically produce an appropriate training set for large structures. We use a per-atom uncertainty estimate to identify the most uncertain atoms and extract chunks centered around these atoms. It is crucial that these small chunks are both large enough to satisfy the ML's nearsighted principle (that is, filling the cutoff radius) and are large enough to be converged with respect to the electronic structure calculation.
We present data indicating when the electronic structure calculations are converged with respect to the structure size, which fundamentally limits the accuracy of any nearsighted ML calculator. These new atomic chunks are calculated in electronic structures, and crucially, only a single force, that of the central atom, is added to the growing training set, preventing the noisy and irrelevant information from the piece's boundary from interfering with ML training. The resulting ML potentials are robust, despite requiring single-point calculations on only small reference structures and never seeing large training structures. We demonstrated our approach via structure optimization of a 260-atom structure and extended the approach to clusters with up to 1415 atoms.

NOT USED (low confidence) X. Zang, Y. Dong, C. Jian, N. Ferralis, and J. Grossman, “Upgrading carbonaceous materials: Coal, tar, pitch, and beyond,” Matter. 2022. Times cited: 17

NOT USED (low confidence) J. A. Vita and D. Trinkle, “Exploring the necessary complexity of interatomic potentials,” Computational Materials Science. 2021. Times cited: 8

NOT USED (low confidence) M. A. Chowdhury et al., “Recent machine learning guided material research - A review,” Computational Condensed Matter. 2021. Times cited: 3

NOT USED (low confidence) L.-Y. Xue et al., “ReaxFF-MPNN machine learning potential: a combination of reactive force field and message passing neural networks,” Physical Chemistry Chemical Physics. 2021. Times cited: 3

Abstract: Reactive force field (ReaxFF) is a powerful computational tool for exploring material properties. In this work, we proposed an enhanced reactive force field model, which uses message passing neural networks (MPNN) to compute the bond order and bond energies. MPNN are a variation of graph neural networks (GNN), which are derived from graph theory.
In MPNN or GNN, molecular structures are treated as a graph and atoms and chemical bonds are represented by nodes and edges. The edge states correspond to the bond order in ReaxFF and are updated by message functions according to the message passing algorithms. The results are very encouraging; the investigation of the potential, such as the potential energy surface, reaction energies and equation of state, are greatly improved by this simple improvement. The new potential model, called reactive force field with message passing neural networks (ReaxFF-MPNN), is provided as an interface in an atomic simulation environment (ASE) with which the original ReaxFF and ReaxFF-MPNN potential models can do MD simulations and geometry optimizations within the ASE. Furthermore, machine learning, based on an active learning algorithm and gradient optimizer, is designed to train the model. We found that the active learning machine not only saves the manual work to collect the training data but is also much more effective than the general optimizer.

NOT USED (low confidence) S. K. Achar, L. Zhang, and J. Johnson, “Efficiently Trained Deep Learning Potential for Graphane,” The Journal of Physical Chemistry C. 2021. Times cited: 12

NOT USED (low confidence) M. Gilbert et al., “Perspectives on multiscale modelling and experiments to accelerate materials development for fusion,” Journal of Nuclear Materials. 2021. Times cited: 33

NOT USED (low confidence) K. Yang et al., “Self-supervised learning and prediction of microstructure evolution with convolutional recurrent neural networks,” Patterns. 2021. Times cited: 32

NOT USED (low confidence) K. Fujioka, Y. Luo, and R. Sun, “Active Machine Learning for Chemical Dynamics Simulations. I. Estimating the Energy Gradient,” ChemRxiv. 2021. Times cited: 0

Abstract: Ab initio molecular dynamics (AIMD) simulation studies are a direct
way to visualize chemical reactions and help elucidate non-statistical dynamics that does not follow the intrinsic reaction coordinate. However, due to the enormous amount of the ab initio energy gradient calculations needed for AIMD, it has been largely restrained to limited sampling and low level of theory (i.e., density functional theory with small basis sets). To overcome this issue, a number of machine learning (ML) methods have been employed to predict the energy gradient of the system of interest. In this manuscript, we outline the theoretical foundations of a novel ML method which trains from a varying set of atomic positions and their energy gradients, called interpolating moving ridge regression (IMRR), and directly predicts the energy gradient of a new set of atomic positions. Several key theoretical findings are presented regarding the inputs used to train IMRR and the predicted energy gradient. A hyperparameter used to guide IMRR is rigorously examined as well. The method is then applied to three bimolecular reactions studied with AIMD, including HBr⁺ + CO₂, H₂S + CH, and C₄H₂ + CH, to demonstrate IMRR’s performance on different chemical systems of different sizes. This manuscript also compares the computational cost of the energy gradient calculation with IMRR vs. ab initio, and the results highlight IMRR as a viable option to greatly increase the efficiency of AIMD.

NOT USED (low confidence) Y. Choi et al., “CHARMM-GUI Polymer Builder for Modeling and Simulation of Synthetic Polymers,” Journal of Chemical Theory and Computation. 2021. Times cited: 48

Abstract: Molecular modeling and simulations are invaluable tools for polymer science and engineering, which predict physicochemical properties of polymers and provide molecular-level insight into the underlying mechanisms. However, building realistic polymer systems is challenging and requires considerable experience because of great variations in structures as well as length and time scales. This work describes Polymer Builder in CHARMM-GUI (http://www.charmm-gui.org/input/polymer), a web-based infrastructure that provides a generalized and automated process to build a relaxed polymer system. Polymer Builder not only provides versatile modeling methods to build complex polymer structures, but also generates realistic polymer melt and solution systems through the built-in coarse-grained model and all-atom replacement. The coarse-grained model parametrization is generalized and extensively validated with various experimental data and all-atom simulations. In addition, the capability of Polymer Builder for generating relaxed polymer systems is demonstrated by density calculations of 34 homopolymer melt systems, characteristic ratio calculations of 170 homopolymer melt systems, a morphology diagram of poly(styrene-b-methyl methacrylate) block copolymers, and self-assembly behavior of amphiphilic poly(ethylene oxide-b-ethylethane) block copolymers in water. We hope that Polymer Builder is useful to carry out innovative and novel polymer modeling and simulation research to acquire insight into structures, dynamics, and underlying mechanisms of complex polymer-containing systems.

NOT USED (low confidence) F. Musil, A. Grisafi, A. P. Bartók, C. Ortner, G. Csányi, and M. Ceriotti, “Physics-Inspired Structural Representations for Molecules and Materials,” Chemical Reviews. 2021. Times cited: 210

Abstract: The first step in the construction of a regression model or a data-driven analysis, aiming to predict or elucidate the relationship between the atomic-scale structure of matter and its properties, involves transforming the Cartesian coordinates of the atoms into a suitable representation. The development of atomic-scale representations has played, and continues to play, a central role in the success of machine-learning methods for chemistry and materials science. This review summarizes the current understanding of the nature and characteristics of the most commonly used structural and chemical descriptions of atomistic structures, highlighting the deep underlying connections between different frameworks and the ideas that lead to computationally efficient and universally applicable models. It emphasizes the link between properties, structures, their physical chemistry, and their mathematical description, provides examples of recent applications to a diverse set of chemical and materials science problems, and outlines the open questions and the most promising research directions in the field.

NOT USED (low confidence) J. Ding et al., “Machine learning for molecular thermodynamics,” Chinese Journal of Chemical Engineering. 2021. Times cited: 16

NOT USED (low confidence) B. G. del Rio, C. Kuenneth, H. Tran, and R. Ramprasad, “An Efficient Deep Learning Scheme To Predict the Electronic Structure of Materials and Molecules: The Example of Graphene-Derived Allotropes,” The Journal of Physical Chemistry A. 2020.
Times cited: 12

Abstract: Computations based on density functional theory (DFT) are transforming various aspects of materials research and discovery. However, the effort required to solve the central equation of DFT, namely the Kohn-Sham equation, which remains a major obstacle for studying large systems with hundreds of atoms in a practical amount of time with routine computational resources. Here, we propose a deep learning architecture that systematically learns the input-output behavior of the Kohn-Sham equation and predicts the electronic density of states, a primary output of DFT calculations, with unprecedented speed and chemical accuracy. The algorithm also adapts and progressively improves in predictive power and versatility as it is exposed to new diverse atomic configurations. We demonstrate this capability for a diverse set of carbon allotropes spanning a large configurational and phase space. The electronic density of states, along with the electronic charge density, may be used downstream to predict a variety of materials properties, bypassing the Kohn-Sham equation, leading to an ultrafast and high-fidelity DFT emulator.

NOT USED (low confidence) S. A. Etesami, M. Laradji, and E. Asadi, “Reliability of molecular dynamics interatomic potentials for modeling of titanium in additive manufacturing processes,” Computational Materials Science. 2020. Times cited: 5

NOT USED (low confidence) W. Li, Y. Ando, and S. Watanabe, “Effects of density and composition on the properties of amorphous alumina: A high-dimensional neural network potential study,” The Journal of Chemical Physics. 2020. Times cited: 5

Abstract: Amorphous alumina (a-AlOx), which plays important roles in several technological fields, shows a wide variation of density and composition.
However, their influences on the properties of a-AlOx have rarely been investigated from a theoretical perspective. In this study, high-dimensional neural network potentials were constructed to generate a series of atomic structures of a-AlOx with different densities (2.6–3.3 g/cm³) and O/Al ratios (1.0–1.75). The structural, vibrational, mechanical, and thermal properties of the a-AlOx models were investigated, as well as the Li and Cu diffusion behavior in the models. The results showed that density and composition had different degrees of effects on the different properties. The structural and vibrational properties were strongly affected by composition, whereas the mechanical properties were mainly determined by density. The thermal conductivity was affected by both the density and composition of a-AlOx. However, the effects on the Li and Cu diffusion behavior were relatively unclear.

NOT USED (low confidence) M. C. Barry, K. Wise, S. Kalidindi, and S. Kumar, “Voxelized Atomic Structure Potentials: Predicting Atomic Forces with the Accuracy of Quantum Mechanics Using Convolutional Neural Networks,” The Journal of Physical Chemistry Letters. 2020. Times cited: 9

Abstract: This paper introduces Voxelized Atomic Structure (VASt) potentials as a machine learning (ML) framework for developing interatomic potentials. The VASt framework utilizes a voxelized representation of the atomic structure directly as the input to a convolutional neural network (CNN). This allows for high fidelity representations of highly complex and diverse spatial arrangements of the atomic environments of interest. The CNN implicitly establishes the low-dimensional features needed to correlate each atomic neighborhood to its net atomic force.
The selection of the salient features of the atomic structure (i.e., feature engineering) in the VASt framework is implicit, comprehensive, automated, scalable, and highly efficient. The calibrated convolutional layers learn the complex spatial relationships and multibody interactions that govern the physics of atomic systems with remarkable fidelity. We show that VASt potentials predict highly accurate forces on two phases of silicon carbide and the thermal conductivity of silicon over a range of isotropic strain.

NOT USED (low confidence) J. P. Allers, J. A. Harvey, F. Garzon, and T. Alam, “Machine learning prediction of self-diffusion in Lennard-Jones fluids,” The Journal of Chemical Physics. 2020. Times cited: 32

Abstract: Different machine learning (ML) methods were explored for the prediction of self-diffusion in Lennard-Jones (LJ) fluids. Using a database of diffusion constants obtained from the molecular dynamics simulation literature, multiple Random Forest (RF) and Artificial Neural Net (ANN) regression models were developed and characterized. The role and improved performance of feature engineering coupled to the RF model development was also addressed. The performance of these different ML models was evaluated by comparing the prediction error to an existing empirical relationship used to describe LJ fluid diffusion. It was found that the ANN regression models provided superior prediction of diffusion in comparison to the existing empirical relationships.

NOT USED (low confidence) P. O. Dral, A. Owens, A. Dral, and G. Csányi, “Hierarchical machine learning of potential energy surfaces,” The Journal of Chemical Physics. 2020. Times cited: 49

Abstract: We present hierarchical machine learning (hML) of highly accurate potential energy surfaces (PESs).
Our scheme is based on adding predictions of multiple Δ-machine learning models trained on energies and energy corrections calculated with a hierarchy of quantum chemical methods. Our (semi-)automatic procedure determines the optimal training set size and composition of each constituent machine learning model, simultaneously minimizing the computational effort necessary to achieve the required accuracy of the hML PES. Machine learning models are built using kernel ridge regression, and training points are selected with structure-based sampling. As an illustrative example, hML is applied to a high-level ab initio CH₃Cl PES and is shown to significantly reduce the computational cost of generating the PES by a factor of 100 while retaining similar levels of accuracy (errors of ∼1 cm⁻¹).

NOT USED (low confidence) P. Pattnaik, S. Raghunathan, T. Kalluri, P. Bhimalapuram, C. V. Jawahar, and U. Priyakumar, “Machine Learning for Accurate Force Calculations in Molecular Dynamics Simulations,” The Journal of Physical Chemistry A. 2020. Times cited: 32

Abstract: The computationally expensive nature of ab initio molecular dynamics simulations severely limits its ability to simulate large system sizes and long time scales, both of which are necessary to imitate experimental conditions. In this work, we explore an approach to make use of the data obtained using the quantum mechanical density functional theory (DFT) on small systems and use deep learning to subsequently simulate large systems by taking liquid argon as a test case. A suitable vector representation was chosen to represent the surrounding environment of each Ar atom, and a ΔNetFF machine learning model where, the neural network was trained to predict the difference in resultant forces obtained by DFT and classical force fields was introduced.
Molecular dynamics simulations were then performed using forces from the neural network for various system sizes and time scales depending on the properties we calculated. A comparison of properties obtained from the classical force field and the neural network model was presented alongside available experimental data to validate the proposed method.

NOT USED (low confidence) M. Hodapp and A. Shapeev, “In operando active learning of interatomic interaction during large-scale simulations,” Machine Learning: Science and Technology. 2020. Times cited: 17

Abstract: A well-known drawback of state-of-the-art machine-learning interatomic potentials is their poor ability to extrapolate beyond the training domain. For small-scale problems with tens to hundreds of atoms this can be solved by using active learning which is able to select atomic configurations on which a potential attempts extrapolation and add them to the ab initio-computed training set. In this sense an active learning algorithm can be viewed as an on-the-fly interpolation of an ab initio model. For large-scale problems, possibly involving tens of thousands of atoms, this is not feasible because one cannot afford even a single density functional theory (DFT) computation with such a large number of atoms. This work marks a new milestone toward fully automatic ab initio-accurate large-scale atomistic simulations. We develop an active learning algorithm that identifies local subregions of the simulation region where the potential extrapolates. Then the algorithm constructs periodic configurations out of these local, non-periodic subregions, sufficiently small to be computable with plane-wave DFT codes, in order to obtain accurate ab initio energies.
We benchmark our algorithm on the problem of screw dislocation motion in bcc tungsten and show that our algorithm reaches ab initio accuracy, down to typical magnitudes of numerical noise in DFT codes. We show that our algorithm reproduces material properties such as core structure, Peierls barrier, and Peierls stress. This unleashes new capabilities for computational materials science toward applications which have currently been out of scope if approached solely by ab initio methods.

NOT USED (low confidence) D. Kamal, A. Chandrasekaran, R. Batra, and R. Ramprasad, “A charge density prediction model for hydrocarbons using deep neural networks,” Machine Learning: Science and Technology. 2020. Times cited: 17

Abstract: The electronic charge density distribution ρ(r) of a given material is among the most fundamental quantities in quantum simulations from which many large scale properties and observables can be calculated. Conventionally, ρ(r) is obtained using Kohn–Sham density functional theory (KS-DFT) based methods. But, the high computational cost of KS-DFT renders it intractable for systems involving thousands/millions of atoms. Thus, recently there has been efforts to bypass expensive KS equations, and directly predict ρ(r) using machine learning (ML) based methods. Here, we build upon one such scheme to create a robust and reliable ρ(r) prediction model for a diverse set of hydrocarbons, involving huge chemical and morphological complexity (saturated, unsaturated molecules, cyclo-groups and amorphous and semi-crystalline polymers). We utilize a grid-based fingerprint to capture the atomic neighborhood around an arbitrary point in space, and map it to the reference ρ(r) obtained from standard DFT calculations at that point.
Owing to the grid-based learning, dataset sizes exceed billions of points, which is trained using deep neural networks in conjunction with a incremental learning based approach. The accuracy and transferability of the ML approach is demonstrated on not only a diverse test set, but also on a completely unseen system of polystyrene under different strains. Finally, we note that the general approach adopted here could be easily extended to other material systems, and can be used for quick and accurate determination of ρ(r) for DFT charge density initialization, computing dipole or quadrupole, and other observables for which reliable density functional are known.

NOT USED (low confidence) G. Pilania, P. Balachandran, J. Gubernatis, and T. Lookman, “Data-Based Methods for Materials Design and Discovery: Basic Ideas and General Methods.” 2020. Times cited: 11

Abstract: Machine learning methods are changing the way we design and discover new materials. This book provides an overview of approaches successfully used in addressing materials problems (alloys,...

NOT USED (low confidence) M. E. Khatib and W. A. Jong, “ML4Chem: A Machine Learning Package for Chemistry and Materials Science,” ArXiv. 2020. Times cited: 3

Abstract: ML4Chem is an open-source machine learning library for chemistry and materials science. It
provides an extendable platform to develop and deploy machine learning models and pipelines and is targeted to the non-expert and expert users. ML4Chem follows user-experience design and offers the needed tools to go from data preparation to inference. Here we introduce its atomistic module for the implementation, deployment, and reproducibility of atom-centered models. This module is composed of six core building blocks: data, featurization, models, model optimization, inference, and visualization. We present their functionality and ease of use with demonstrations utilizing